The idea of tracking motion on a computer using a video camera has been around for a couple of decades, and it is still far from perfect, because the construction of vision is a complex subject. We don't just "see"; we construct colors, edges, objects, depth, and other aspects of vision from the light that reaches our retinas. If you want to program a computer to see in the same way, it has to have subroutines that define the characteristics of vision and allow it to distinguish those characteristics in the array of pixels that comes from a camera. For more on that, see Visual Intelligence: How We Create What We See by Donald Hoffman. There are many other texts on the subject, but his is a nice popular introduction. What follows is a very brief introduction to some of the basic concepts behind computer vision and video manipulation.

There are a number of toolkits available for getting data from a camera and manipulating it. They range from very high-level, simple graphical tools to low-level tools that allow you to manipulate the pixels directly. Which one you need depends on what you want to do. Regardless of your application, the first step is always the same: you get the pixels from the camera in an array of numbers, one frame at a time, and do things with the array. Typically, the array is a list of numbers giving each pixel's location and the relative levels of red, green, and blue light at that location.
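To make the idea of a frame as an array of numbers concrete, here is a minimal sketch in plain Python. It assumes a frame is stored as rows of (red, green, blue) tuples with each level between 0 and 255; the names `make_frame` and `pixels` are made up for this illustration and do not come from any of the toolkits mentioned here.

```python
# A frame as a list of rows; each pixel is an (r, g, b) tuple, 0-255 per channel.
# This layout is an assumption for illustration; real toolkits differ.
WIDTH, HEIGHT = 4, 3


def make_frame(width, height, color=(0, 0, 0)):
    """Return a height x width frame filled with a single color."""
    return [[color for _ in range(width)] for _ in range(height)]


def pixels(frame):
    """Yield (x, y, (r, g, b)) for every pixel, row by row."""
    for y, row in enumerate(frame):
        for x, rgb in enumerate(row):
            yield x, y, rgb


frame = make_frame(WIDTH, HEIGHT)
frame[1][2] = (255, 0, 0)  # row 1, column 2 becomes pure red

for x, y, (r, g, b) in pixels(frame):
    if (r, g, b) != (0, 0, 0):
        print("non-black pixel at", (x, y), "with levels", (r, g, b))
```

Everything else in this introduction comes down to reading and comparing numbers in a structure like this one.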

There are a few popular applications that people tend to develop when they attach a camera to a computer:

Video manipulation takes the image from the camera, changes it somehow, and re-presents it to the viewer in changed form. In this case, the computer doesn't need to be able to interpret objects in the image, because you're basically just applying filters, not unlike Photoshop filters.

Tracking looks for a blob of pixels that's unique, perhaps the brightest blob, or the reddest blob, and tracks its location over a series of frames. Tracking can be complicated, because the brightest blob from one frame to another might not be produced by the same object.

Object recognition looks for a blob that matches a particular pattern, like a face, identifies that blob as an object, and keeps track of its location over time. Object recognition is the hardest of all three applications, because it involves both tracking and pattern recognition. If the object rotates, or if its colors shift because of a lighting change, or it gets smaller as it moves away from the camera, the computer has to be programmed to compensate. If it's not, it may fail to "see" the object, even though it's still there.
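Of these three applications, video manipulation is the easiest to sketch, because each output pixel depends only on the matching input pixel. The following is an illustrative sketch, assuming a frame is a list of rows of (r, g, b) tuples; `invert` and `grayscale` are hypothetical helper names, not functions from any toolkit named here.

```python
def invert(frame):
    """Return a new frame with every color level flipped (a negative image)."""
    return [[(255 - r, 255 - g, 255 - b) for (r, g, b) in row] for row in frame]


def grayscale(frame):
    """Return a new frame where each pixel is the plain average of its channels."""
    out = []
    for row in frame:
        new_row = []
        for r, g, b in row:
            level = (r + g + b) // 3  # simple average, not a perceptual weighting
            new_row.append((level, level, level))
        out.append(new_row)
    return out


tiny_frame = [[(255, 0, 10), (30, 60, 90)]]
print(invert(tiny_frame))
print(grayscale(tiny_frame))
```

Neither function needs to know what is in the picture, which is why filters like these are so much simpler than tracking or recognition.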

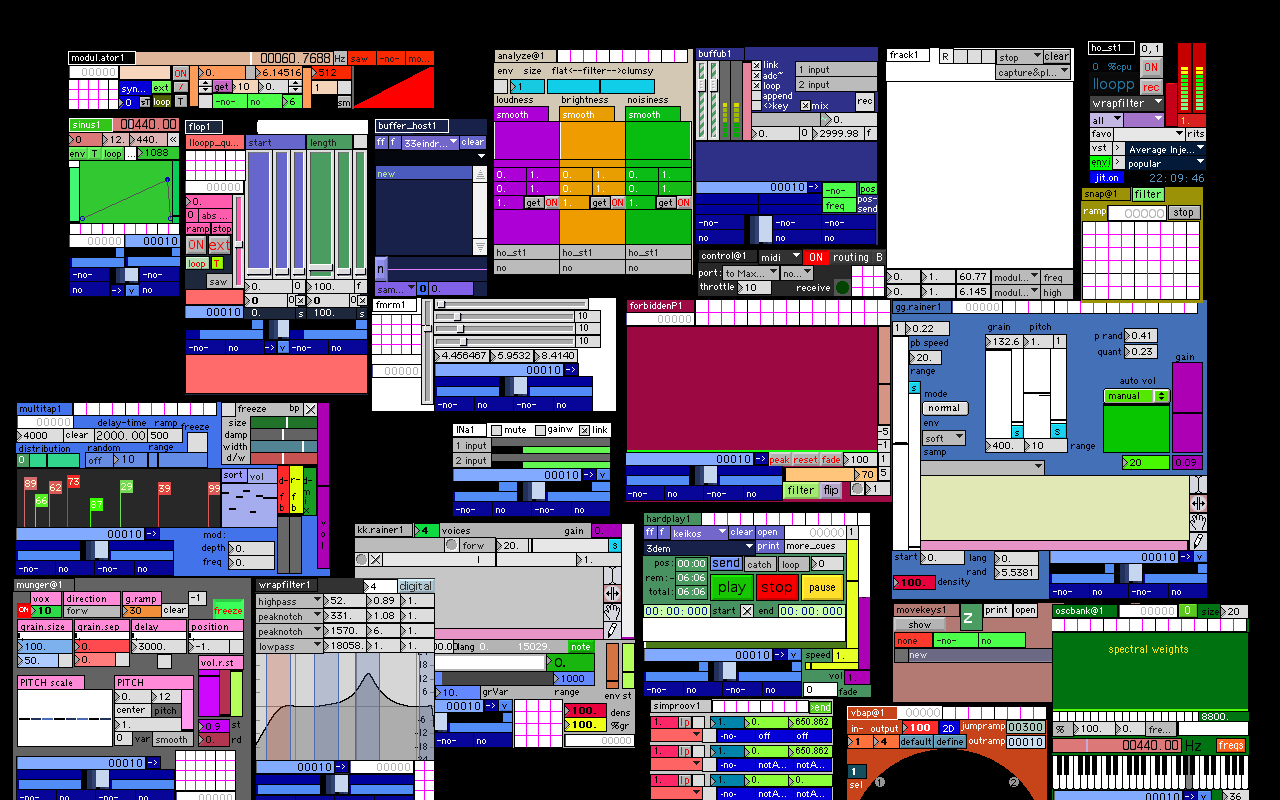

There are a number of programs available for video manipulation. Jitter, a plugin for Max/MSP, is a popular one. David Rokeby's softVNS is another plugin for Max. Mark Coniglio's Isadora is a visual programming environment like Max/MSP that's dedicated to video control, optimized for live events like dance and theatre. Image/ine is similar to Isadora, though aging, as it hasn't been updated in a couple of years. There are also countless VJ packages that will let you manipulate live video. In addition, most text-based programming languages have toolkits too. Danny Rozin's TrackThemColors Pro does the job for Macromedia Director MX, as does Josh Nimoy's Myron. Myron also works for Processing. Dan O'Sullivan's vbp does the job for Java. Dan has an excellent site on the subject as well, with many more links. He's also got a simple example for Processing on his site. Almost all of these toolkits can handle video tracking as well.

There are two methods you'll commonly find in video tracking software: the zone approach and the blob approach. Software such as softVNS, Eric Singer's Cyclops, or cv.jit (a plugin for Jitter that affords video tracking) takes the zone approach. These tools map the video image into zones, and give you information about the amount of change in each zone from frame to frame. This is useful if your camera is in a fixed location, and you want fixed zones that trigger activity. Eric has a good example on his site in which he uses Cyclops to play virtual drums. The zone approach makes it difficult to track objects across an image, however. TrackThemColors and Myron are examples of the blob approach, in that they return information about unique blobs within the image, making it easier to track an object moving across an image.
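The zone approach can be sketched in a few lines. This is not how softVNS, Cyclops, or cv.jit work internally; it is a minimal illustration that assumes frames are lists of rows of (r, g, b) tuples, divides the image into a grid, and adds up the brightness change in each cell between two frames. The name `zone_change` and the use of summed channel levels as "brightness" are assumptions made for this example.

```python
def brightness(rgb):
    """Crude brightness: the sum of the red, green, and blue levels."""
    return sum(rgb)


def zone_change(prev, curr, cols, rows):
    """Return a rows x cols grid holding the total brightness change per zone."""
    height, width = len(curr), len(curr[0])
    totals = [[0] * cols for _ in range(rows)]
    for y in range(height):
        zone_y = y * rows // height  # which zone row this pixel falls in
        for x in range(width):
            zone_x = x * cols // width  # which zone column this pixel falls in
            totals[zone_y][zone_x] += abs(
                brightness(curr[y][x]) - brightness(prev[y][x])
            )
    return totals


# Two 4x4 frames: all black, then one white pixel appears in the top right.
previous = [[(0, 0, 0)] * 4 for _ in range(4)]
current = [row[:] for row in previous]
current[0][3] = (255, 255, 255)

print(zone_change(previous, current, cols=2, rows=2))
```

A program built this way would compare each zone's total against a threshold and trigger an action, such as a drum sound, when a zone changes enough. Note that it reports where change happened, not which object caused it.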

At the most basic level, a computer can tell you a pixel's position, and its color (if you are using a color camera). From those facts, other information can be determined:

- The brightest pixel can be determined by seeing which pixel has the highest color values.

- A "blob" of color can be determined by choosing a starting color, setting a range of variation, and checking the neighboring pixels of a selected pixel to see if they are in the range of variation.

- Areas of change can be determined by comparing one frame of video with a previous frame, and seeing which pixels have the most significantly different color values.

- Areas of pattern can be followed by selecting an area to track, and continuing to search for areas that match the pattern of pixels selected. Again, a range of variation can be set to allow for "fuzziness".
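The first two items in this list can be sketched as follows. This is one possible approach under stated assumptions, not the method used by TrackThemColors or Myron: frames are lists of rows of (r, g, b) tuples, "brightest" means the highest sum of the three levels, and the blob is grown with a simple flood fill over the four neighbors of each pixel. All function names are hypothetical.

```python
from collections import deque


def brightest_pixel(frame):
    """Return (x, y) of the pixel with the highest r + g + b (first one on ties)."""
    best, best_level = None, -1
    for y, row in enumerate(frame):
        for x, (r, g, b) in enumerate(row):
            if r + g + b > best_level:
                best, best_level = (x, y), r + g + b
    return best


def close_enough(rgb, target, tolerance):
    """True if every channel is within `tolerance` of the target color."""
    return all(abs(c - t) <= tolerance for c, t in zip(rgb, target))


def find_blob(frame, start, target, tolerance):
    """Flood fill from start (x, y); return the set of connected matching pixels."""
    height, width = len(frame), len(frame[0])
    sx, sy = start
    if not close_enough(frame[sy][sx], target, tolerance):
        return set()
    blob = {start}
    queue = deque([start])
    while queue:
        x, y = queue.popleft()
        for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            inside = 0 <= nx < width and 0 <= ny < height
            if inside and (nx, ny) not in blob:
                if close_enough(frame[ny][nx], target, tolerance):
                    blob.add((nx, ny))
                    queue.append((nx, ny))
    return blob


def centroid(blob):
    """Average position of a non-empty blob, usable as its tracked location."""
    n = len(blob)
    return (sum(x for x, _ in blob) / n, sum(y for _, y in blob) / n)


# A 4x3 black frame with two adjacent reddish pixels.
scene = [[(0, 0, 0)] * 4 for _ in range(3)]
scene[1][1] = (250, 10, 5)
scene[1][2] = (240, 0, 0)

seed = brightest_pixel(scene)
red_blob = find_blob(scene, seed, target=(255, 0, 0), tolerance=30)
print(seed, sorted(red_blob), centroid(red_blob))
```

Running this on every frame and recording the centroid gives a basic tracker. The tolerance is the "range of variation" described above: too small and the blob breaks apart, too large and it swallows the background.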

A few practical principles follow from this:

Colors to be tracked need consistent lighting. The computer can't tell if my shirt is red, for example; it can tell that one pixel or a range of pixels contains a color value such as [255,0,0], but if the lighting changes and my shirt appears gray because there is no red light for it to reflect, the computer will no longer "see" it as red.

Shapes to be tracked need to stay somewhat consistent in shape. The computer doesn't have stereoscopic vision (two eyes that allow us to determine depth by comparing the difference in image that our two eyes receive), so it sees everything as flat. If your hand turns sideways with respect to the camera, the pattern changes because your hand appears thinner. So the computer may no longer recognize your hand as your hand.
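The effect of a changing shape on pattern matching can be shown with a small sketch. It assumes grayscale frames (one brightness number per pixel) and compares a stored pattern against every position in the frame using the sum of absolute differences, which is one common choice among several. The names `patch_difference` and `find_pattern`, and the `max_difference` threshold that stands in for "fuzziness", are all made up for this example.

```python
def patch_difference(frame, template, left, top):
    """Sum of absolute differences between the template and one frame region."""
    total = 0
    for ty, row in enumerate(template):
        for tx, level in enumerate(row):
            total += abs(frame[top + ty][left + tx] - level)
    return total


def find_pattern(frame, template, max_difference):
    """Return ((x, y), difference) for the best match, or None if too different."""
    frame_h, frame_w = len(frame), len(frame[0])
    tmpl_h, tmpl_w = len(template), len(template[0])
    best = None
    for top in range(frame_h - tmpl_h + 1):
        for left in range(frame_w - tmpl_w + 1):
            diff = patch_difference(frame, template, left, top)
            if best is None or diff < best[1]:
                best = ((left, top), diff)
    if best is not None and best[1] <= max_difference:
        return best
    return None


# The stored pattern: a bright 2x2 square.
pattern = [[200, 200],
           [200, 200]]

# Frame A: the square appears, slightly altered, with its corner at (1, 2).
frame_a = [[0, 0, 0, 0],
           [0, 0, 0, 0],
           [0, 190, 200, 0],
           [0, 200, 210, 0]]

# Frame B: the object has turned sideways and is only one pixel wide.
frame_b = [[0, 0, 0, 0],
           [0, 0, 0, 0],
           [0, 200, 0, 0],
           [0, 200, 0, 0]]

print(find_pattern(frame_a, pattern, max_difference=50))
print(find_pattern(frame_b, pattern, max_difference=50))
```

In frame A the small variations stay inside the allowed difference, so the pattern is found. In frame B the thinner shape differs too much at every position, so the search reports nothing even though the object is still in view.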

One simple way of getting consistent tracking is to reduce the amount of information the computer has to track. For example, if the camera is fitted with a filter that blocks visible light and passes only infrared, it will see only infrared light. This is very useful, since incandescent sources (lightbulbs with filaments) give off a lot of infrared, whereas fluorescent sources give off very little. Furthermore, the human body doesn't give off infrared light at the near-infrared wavelengths a video camera can detect, so people only show up when an infrared source lights them. This is also useful for tracking in front of a projection, since the image from most LCD projectors contains no infrared light.

When considering where to position the camera, consider what information you want to track. For example, if you want to track a viewer's motion in two dimensions across a floor, then positioning a camera in front of the viewer may not be the best choice. Consider ways of positioning the camera overhead, or underneath the viewer.

Often it is useful to put the tracking camera behind a translucent projection screen and track what changes on the surface of the screen. This way, the viewer can "draw" with light or darkness on the screen.

Source: http://www.tigoe.net/pcomp/videoTrack.shtml
